Fear of failure can slow down a company, and in information technology, speed to market is critical. Traditionally, companies have tried to get it right the first time and spent a lot of time and money on pre-release activities like manual testing. This front-loading of risk mitigation not only slows down a company's ability to build and release features and products, but when, not if, a failure happens in production, rectification is usually slow and expensive.

How to lower the cost of failure in IT? Firstly, implement tools and processes for business continuity in the event of failure. Then make sure you can easily, quickly and reliably move code from development into production. Then ensure there is enough monitoring and alerting in place to quickly notify the team if issues are occurring in production. Finally, make sure that there are mechanisms in place to easily roll back deployments in case they are not successful.

The technology landscape changes continuously, and often the only way to know what is going to work is to try it. Lowering the cost of failure enables companies to experiment and learn about their customers, thereby allowing them to build a successful product that solves their customers' needs.

Plan for Failure

I have found in numerous companies that planning for failure is neglected or given cursory consideration. There is usually a considerable amount of time and expense invested in development and pre-release validation, but working out how to manage a failure of the system is ignored, neglected or forgotten.

There is an attitude that if we invest enough resources and work smart, we can prevent harmful code or a bad feature from reaching production. This attitude is naive at best and delusional at worst. New IT products are incredibly complex internally and rely more and more on external systems. Guaranteeing a new piece of code will behave as expected in the wild is impossible.

So the bad news is that failures are inevitable. The good news is that, with some planning and sensible design decisions, they don't have to be fatal. If an organisation gets good at managing and learning from failures, it can become more resilient and avoid significant failures.

Planning for failure means that we consider:

- How would we know if the system/component was failing? (relying on complaints from customers is not a good look)

- How would we diagnose the issue? Does the system output enough relevant and contextual information to easily accessible logs?

- Is data being backed up?

- Can we spin up another environment with backed up data? How long does that take?

- While the system is down, how can the company continue to function, e.g. take orders, receive goods, field requests etc.?

- When the system comes back online, how is the system going to catch up? For example, orders, goods received, and other transactions that were recorded manually while the system was down now need to be entered into the system. How long will that take?

- Who is doing what during an incident?

- How and when will the incident be escalated/de-escalated? What will happen when an incident changes severity?

- Once an incident has been resolved, how will the postmortem and related activities be conducted and when?

Being good at planning for, managing, learning from and adapting to small failures can inoculate you against fatal failures and also improve the quality of your product.

Rolling Forwards

A company must have the technologies and processes in place to be able to easily, quickly and reliably move finished code from a developer's machine to production. Setting up this pipeline for the completed code is vitally important. Once it has been established, it can then be enriched and expanded as needed.

Initially, the pipeline may be as simple as a code repository hooked up to a build server that only tries to compile the code and produce an artifact like a JAR, WAR, RPM or ZIP file. The artifact can then be manually deployed to production. From this point on, company policy must allow only artifacts produced by the build server to be deployed into production.

Now that there is a single path into production, more manual and automated gates can be introduced into the pipeline. You might create an automated test suite and also introduce a manual approval step for any newly built artifact. Only artifacts that have passed the automated test suite and been reviewed by the test team (approved) can be deployed.

Since the test team only needs to look at artifacts that have passed automated testing, as the automated test suite grows and detects more issues, the test team has fewer artifacts to review. Eventually, some artifacts will have a rigorous enough automated test suite that the test team rarely finds an issue, and the approval step can be removed for that artifact.
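The gating rule described above can be sketched in a few lines. This is only an illustration under assumed names: the `Artifact` record, its fields and the `can_deploy` function are hypothetical and do not belong to any particular build server.

```python
from dataclasses import dataclass


@dataclass
class Artifact:
    """Hypothetical record a build server might keep for each artifact."""
    name: str
    built_by_build_server: bool
    tests_passed: bool
    manually_approved: bool
    # Can be switched off once the automated test suite is trusted.
    approval_required: bool = True


def can_deploy(artifact: Artifact) -> bool:
    """Return True only if the artifact has cleared every gate."""
    if not artifact.built_by_build_server:
        return False  # single path into production
    if not artifact.tests_passed:
        return False  # automated gate
    if artifact.approval_required and not artifact.manually_approved:
        return False  # manual gate
    return True
```

Keeping the approval requirement as a per-artifact flag mirrors the idea that the manual step can be removed one artifact at a time, as confidence in its automated tests grows.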

The pipeline can also be expanded to include the automatic deployment of an artifact into production. So instead of a human needing to copy files and run commands, automate the process and wire it into the pipeline. As the pipeline does more of the heavy lifting, the time it takes to deploy changes can be dramatically reduced, and more deployments can occur.

Rolling Backwards

Rolling forwards is excellent, and if there is an issue in production, being able to roll out a fix quickly is very helpful. However, it takes time to work out what is wrong, how to fix it and then to implement a quality fix. In the meantime, the system/component is down, and people are screaming.

It is essential to think about how any changes pushed into production can be rolled back. Rolling back code can be hard, especially if the systems and architecture haven't been designed with rollbacks in mind.

Rolling back code is not just about working out how to replace the code with a previous version; you also need to consider:

- how/if to revert the database schema?

- what to do with data generated by defective code and stored in the database?

- what to do about data generated by other parts of the system based on information provided by the defective code?

As complex as this sounds, if developers think about how to roll back their code as they write it, they can significantly simplify the process. Database schema changes can be implemented in rollback-friendly patterns. Components can be loosely coupled to encourage separation of concerns, thereby reducing the blast radius of defective code and allowing for easier rollback.

There are also well-established patterns and tools, like Data Lineage, for helping to deal with poisoned data. Data Lineage illustrates the point that thinking about managing failures of your software can help build a more resilient overall system. Bad data may come from a defective deployment but could also come from external systems. Considering nearly all systems rely on data from external systems, being able to handle poisoned data gracefully is a distinct advantage.
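One rollback-friendly pattern is to make schema changes purely additive, so the previous version of the code keeps working against the new schema. The sketch below uses Python's built-in `sqlite3` module with a hypothetical `orders` table and two made-up versions of an insert function. It is an illustration of the idea, not a migration tool.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT NOT NULL)"
)


def insert_order_v1(conn, customer):
    """Old code: knows nothing about the new column."""
    conn.execute("INSERT INTO orders (customer) VALUES (?)", (customer,))


def insert_order_v2(conn, customer, email):
    """New code: also writes the additional column."""
    conn.execute(
        "INSERT INTO orders (customer, email) VALUES (?, ?)", (customer, email)
    )


# Additive migration: a new nullable column, nothing renamed or dropped.
conn.execute("ALTER TABLE orders ADD COLUMN email TEXT")

insert_order_v2(conn, "Ada", "ada@example.com")
# Simulate rolling the code back to v1 while leaving the schema untouched.
insert_order_v1(conn, "Grace")

rows = conn.execute("SELECT customer, email FROM orders ORDER BY id").fetchall()
```

Because the old code never references the new column, the code can be rolled back without reverting the schema. Rows written by the old code simply carry no value for `email`.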

Monitoring, Alarming and Recovery

One has to answer "How will we know if the system is not working?" and then "What impact would that issue have on the business?" and then "How will we diagnose an issue with the system at 3 am with people screaming?"

Too often the only users of a system that are considered are the end customers. However, customers are not the only users of a system. Other users may include:

- Operations Team

- Customer Service Team

- Back Office Team

Monitoring

The operations team needs to be able to monitor the system/component and know when there is a problem, well before someone in customer service rings them up and tells them people are complaining. Does the new code emit log messages at appropriate levels (INFO, WARN, ERROR, FATAL) and with sufficient detail?

A developer needs to ask "Would this log message give someone enough information to understand what the problem is and how to fix it, even if they didn't understand the component well?" Unless the developer wants to be woken up at 3 am to fix the issue (assuming they are still at the company or on the team), they need to make sure someone else can diagnose and fix the issue based on the information they provide.

The customer service team needs to be informed ASAP of any system failures and how they might affect customers, so they can field calls appropriately, and possibly escalate to account managers if major customers are impacted significantly.
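As a rough illustration of a log message that passes this test, the sketch below builds a message stating what failed, the business impact and a suggested next step. The `describe_gateway_failure` helper, the order ID, the gateway URL and the suggested remedy are all made-up examples. Only the standard `logging` module is real.

```python
import logging

logger = logging.getLogger("payments")


def describe_gateway_failure(order_id: str, gateway_url: str,
                             attempts: int, error: str) -> str:
    """Build a message that says what failed, the impact, and a next step."""
    return (
        f"Credit card charge failed for order {order_id}: "
        f"gateway {gateway_url} returned '{error}' after {attempts} attempts. "
        "Impact: customer cannot pay by card. "
        "Next step: check the gateway status, then retry the order."
    )


# Logged at ERROR because a business function is unavailable.
logger.error(
    describe_gateway_failure("A-1001", "https://gateway.example.com", 3, "timeout")
)
```

Compare this with a bare "payment failed" message: the person on call at 3 am can see which order, which dependency and what to try first without reading the code.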

So a dashboard that reads "Voyager component down" isn't as helpful as "Voyager component down - Credit card payment gateway is NOT available."

Monitoring is not just seeing if services are up/down and ports are open. Monitoring should also be answering "Is business function X working correctly?" Synthetic monitoring uses scripts that emulate a user of a system and answer questions like "Can customers see the catalogue?" or "Can customers place an order?"

The back office team needs to know if any component they need to do their job is down. There are parts of the system that are critical but not customer-facing, like claims processing, warehouse receiving and order management. The back office team may also need to organise staff and resources when a system has recovered, e.g. staff to enter received goods that were processed manually while the system was offline.
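A synthetic monitoring runner can be sketched as a set of named business questions, each backed by a script that returns pass or fail. In the sketch below, the two check functions are stand-ins. A real check would drive the site the way a customer does, and the function names are hypothetical.

```python
from typing import Callable, Dict


def run_synthetic_checks(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run each business-level check; any exception counts as a failure."""
    results = {}
    for question, check in checks.items():
        try:
            results[question] = bool(check())
        except Exception:
            results[question] = False
    return results


# Stand-ins for real scripted user journeys (hypothetical).
def can_see_catalogue() -> bool:
    return True


def can_place_order() -> bool:
    raise TimeoutError("checkout did not respond")


results = run_synthetic_checks({
    "Can customers see the catalogue?": can_see_catalogue,
    "Can customers place an order?": can_place_order,
})
```

Keying the results by the business question, rather than by host or port, means a dashboard built on them speaks the language of customer service and the back office.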

Alarming

Getting alarms working well takes time and tuning. You need to consider:

- When to raise an alarm.

- Who needs to be notified.

- How they should be notified.

- When/how to escalate an alarm.

- How an alert/alarm becomes an incident.

Alarms can be raised when a single event happens, e.g. a service is down, or they can be raised only when a series of events occur within a set period, e.g. web servers have high memory and CPU usage but low network usage (something smells bad).

When an alarm is raised, ideally, the person who gets the alarm can either a) resolve the issue or b) understand who needs to be called in to resolve the issue. When handling an alarm, non-technical people may also need to be involved, e.g. someone from marketing may need to put updates on social media platforms.
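The second kind of alarm, one that fires only when several events fall within a set period, can be sketched with a sliding window. The `WindowedAlarm` class is a hypothetical illustration and assumes events are recorded in time order, with timestamps in seconds.

```python
from collections import deque


class WindowedAlarm:
    """Fire only when `threshold` events occur within `window_seconds`."""

    def __init__(self, threshold: int, window_seconds: float):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._timestamps = deque()

    def record(self, timestamp: float) -> bool:
        """Record an event; return True if the alarm should fire."""
        self._timestamps.append(timestamp)
        # Drop events that have fallen out of the window.
        while timestamp - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) >= self.threshold


alarm = WindowedAlarm(threshold=3, window_seconds=60)
fired = [alarm.record(t) for t in (0, 30, 50, 200)]
```

Here the third event fires the alarm because three events landed within 60 seconds, while the isolated event at 200 seconds does not. Tuning the threshold and window is part of the time and effort mentioned above.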

How an alarm is raised needs to be considered. If it is 3 am, sending someone an email to respond to a critical alarm will probably not get them out of bed. On the other hand, you don't want to have a robot phone call pulling them out of bed at 3 am for a minor alarm.
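One way to encode this trade-off is a small routing function from severity and time of day to a notification channel. The severity names, working hours and channel names below are illustrative assumptions, not a recommendation.

```python
from datetime import time


def choose_channel(severity: str, now: time) -> str:
    """Pick a notification channel from severity and time of day."""
    out_of_hours = now < time(8, 0) or now >= time(18, 0)
    if severity == "critical":
        return "phone_call"  # wake someone up regardless of the hour
    if severity == "major":
        return "sms" if out_of_hours else "chat"
    return "email"  # minor: can wait until someone is at their desk
```

For example, `choose_channel("critical", time(3, 0))` returns `"phone_call"`, while a minor alarm at the same hour returns `"email"`.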

The duty of responding to alarms needs to be shared; otherwise, people will get burnt out. When working out a roster, it is important also to identify escalation points and contacts. The primary duty person may be busy resolving an existing alarm or unavailable, so a secondary needs to be listed who can also field alarms.

Not all alarms result in incidents, and if an incident wasn't raised by an alarm, discuss why in the postmortem. When an alarm should be escalated to an incident, and at what severity, must be decided and clarified before an incident happens.

Recovery

Generally, there are two stages of system recovery after a failure. Initially, the system needs to be brought back to a state where it is available. So, for example, the system that accepts customer orders is back up and can handle new orders. Having restored the order system is great and will relieve some pressure, but it is not over yet.

The system also needs to be fully restored. While the order system was down, the business continued, and orders were either taken manually or sales kept a list of people to call. Until those orders have been uploaded into the system, the system has not been fully restored. Customers can't enquire online about orders that are sitting on a piece of paper.

Another aspect of recovery is that of automated recovery. Automated recoveries may be triggered by internal activities like deployments or external factors like increases in activity/load.

If we have automated deployment of code (roll forward), automated rollback of code, and adequate monitoring, then we can craft a solution that detects whether a deployment has caused issues and attempts to roll back the deployment automatically. Note that not all deployments will have a clean rollback process, so developers might need to mark which deployments are safe to roll back automatically.

With cloud-based platforms, we can script recovery routines. So if we detect that we need more web servers to handle increased load, we can have the recovery run automatically. One needs to be careful and ensure there are hard limits, otherwise you may be paying a fortune to scale up a Denial of Service attack :-)
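The control flow of such a solution might look like the sketch below. The `deploy`, `is_healthy` and `rollback` callables are placeholders for whatever the pipeline and monitoring actually provide, and `safe_to_auto_rollback` stands for the developer-supplied marker mentioned above. All of these names are assumptions.

```python
from typing import Callable


def deploy_with_auto_rollback(
    deploy: Callable[[], None],
    is_healthy: Callable[[], bool],
    rollback: Callable[[], None],
    safe_to_auto_rollback: bool,
) -> str:
    """Deploy, check health, and roll back automatically only when marked safe."""
    deploy()
    if is_healthy():
        return "deployed"
    if safe_to_auto_rollback:
        rollback()
        return "rolled_back"
    return "needs_human"  # raise an alarm instead of guessing
```

Returning a distinct `"needs_human"` outcome keeps the automation honest: when a rollback is not known to be clean, the right move is to page someone rather than make things worse.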
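The hard limit can be as simple as clamping the computed server count. The function below is a sketch with made-up default limits and a hypothetical per-server capacity figure. Real cloud platforms expose their own scaling settings for this.

```python
import math


def desired_server_count(requests_per_second: float, capacity_per_server: float,
                         min_servers: int = 2, max_servers: int = 10) -> int:
    """Servers needed for the load, clamped to hard lower and upper limits."""
    needed = math.ceil(requests_per_second / capacity_per_server)
    return max(min_servers, min(needed, max_servers))
```

With these defaults, a load of 450 requests per second at 100 per server asks for 5 servers, while a flood of 100,000 requests per second is capped at 10.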

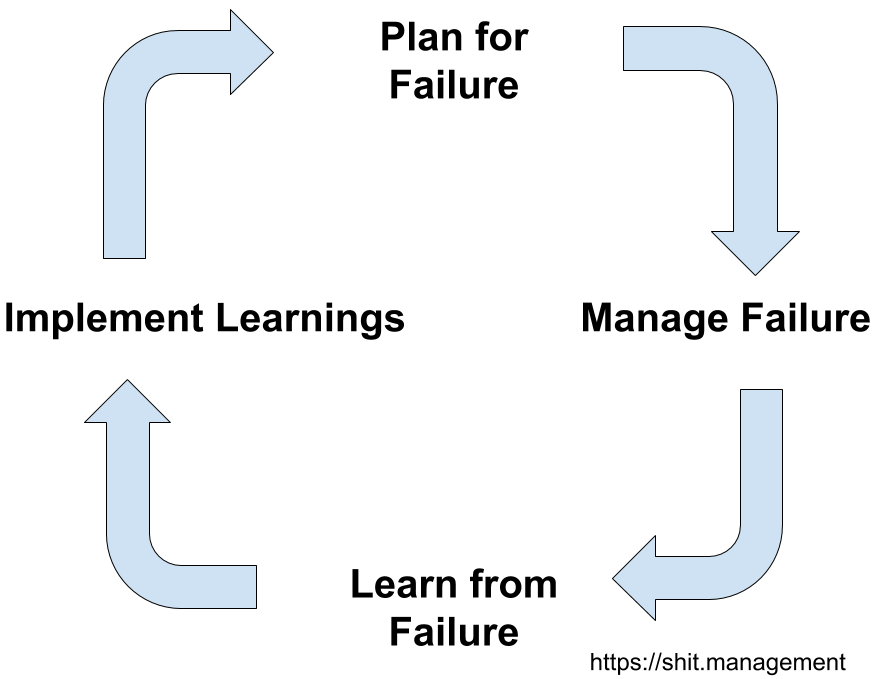

The Virtuous Cycle of Managing Failure

With proper planning and processes, smooth and automated deployments, adequate and trustworthy monitoring and alerting, and rollback tooling and processes that work, the cost of failures can be kept in check.

When something goes wrong people don't have to panic. Everyone knows what they need to do, and if things don't go smoothly, there is now a framework to store this tribal knowledge for next time. Having a framework for managing failure is very powerful because people can shift into proactive problem-solving mode rather than drowning in panic.

Even better, as you get good at detecting and recovering from failure, you may find that you can recover from a failure before customers notice, and if a tree falls in the forest and no one hears it...

If failure is no longer fatal and becomes quite cheap, then we can be more courageous, try new things, and when we do fail, dust ourselves off and try again saying "Interesting, learned something new."

"Would you like me to give you a formula for... success? It's quite simple, really. Double your rate of failure." Thomas J. Watson

Related Questions

How can we measure failure and recovery in IT? To understand if we are getting better at recovering from failure, we can measure our MTTR (Mean Time to Repair/Restore). As we get better at repairing (bringing a system back online) and restoring (getting a system back to a pre-failure state with offline work uploaded), we should see our MTTR go down. We can also keep track of our MTBF (Mean Time Between Failures), which should increase as we mature.
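Both metrics can be computed from a list of incident start and end times. The sketch below uses one common convention, where MTBF is the mean uptime between the end of one failure and the start of the next. Other definitions exist, and the `Incident` tuple and function names are assumptions for illustration. `mtbf` needs at least two incidents.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Incident = Tuple[datetime, datetime]  # (failure started, service restored)


def mttr(incidents: List[Incident]) -> timedelta:
    """Mean time from failure to restoration."""
    total = sum((end - start for start, end in incidents), timedelta())
    return total / len(incidents)


def mtbf(incidents: List[Incident]) -> timedelta:
    """Mean uptime between the end of one failure and the start of the next."""
    ordered = sorted(incidents)
    gaps = [nxt[0] - prev[1] for prev, nxt in zip(ordered, ordered[1:])]
    return sum(gaps, timedelta()) / len(gaps)


incidents = [
    (datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 1, 0)),    # 1 hour outage
    (datetime(2024, 1, 11, 1, 0), datetime(2024, 1, 11, 4, 0)),  # 3 hour outage
]
```

For these two sample incidents, `mttr(incidents)` is 2 hours and `mtbf(incidents)` is 10 days.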

Should we be using time as a variable when calculating MTBF? Unless your system has a consistent load and runs 24/7, Mean Time Between Failures may not be the most useful metric. Measuring a transactional activity may give a more useful picture, e.g. Mean Number of Orders Between Failures.
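A transactional version of the metric can be computed from an ordered event stream. This sketch assumes a simplified, hypothetical log where each entry is either `"order"` or `"failure"`. It counts the orders in each run that ends with a failure and requires at least one failure in the stream.

```python
from typing import List


def mean_orders_between_failures(events: List[str]) -> float:
    """Average number of "order" events in each run that ends with a "failure"."""
    counts, current = [], 0
    for event in events:
        if event == "order":
            current += 1
        elif event == "failure":
            counts.append(current)
            current = 0
    # Orders after the last failure belong to a run that has not ended yet.
    return sum(counts) / len(counts)


events = ["order"] * 4 + ["failure"] + ["order"] * 6 + ["failure"] + ["order"] * 3
```

For the sample stream, the runs before each failure contain 4 and 6 orders, so the mean is 5.0 orders between failures.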